Autonomous Vehicles: Moral against the Machine?

Jose Carpio-Pinedo, Advisor and Contributor at Urban AI, is a researcher at the Universidad Complutense de Madrid, a research fellow at the University of California, Berkeley, and a lecturer at Universidad de Salamanca’s MSc in Smart Cities.

What is going on with autonomous vehicles? A few years ago, driverless cars sounded like the next great revolution, but today other innovations like electric cars have caught the media spotlight.

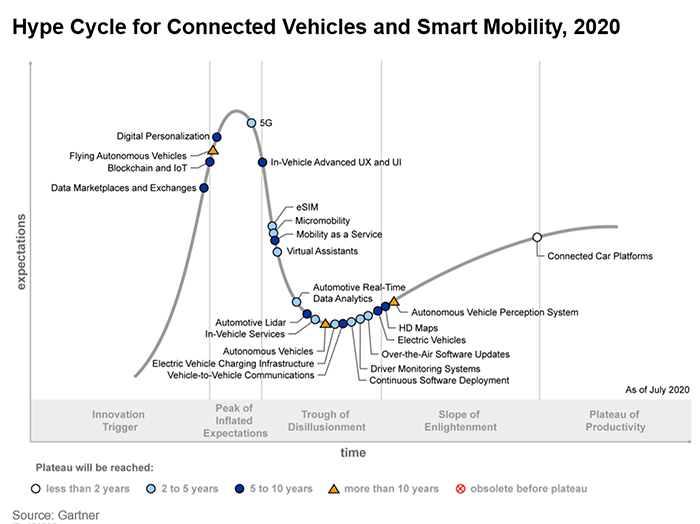

Checking the Gartner Hype Cycle for 2020 and borrowing its terms, we can confirm that autonomous vehicles have already left the “peak of inflated expectations” and now sit in the “trough of disillusionment”.

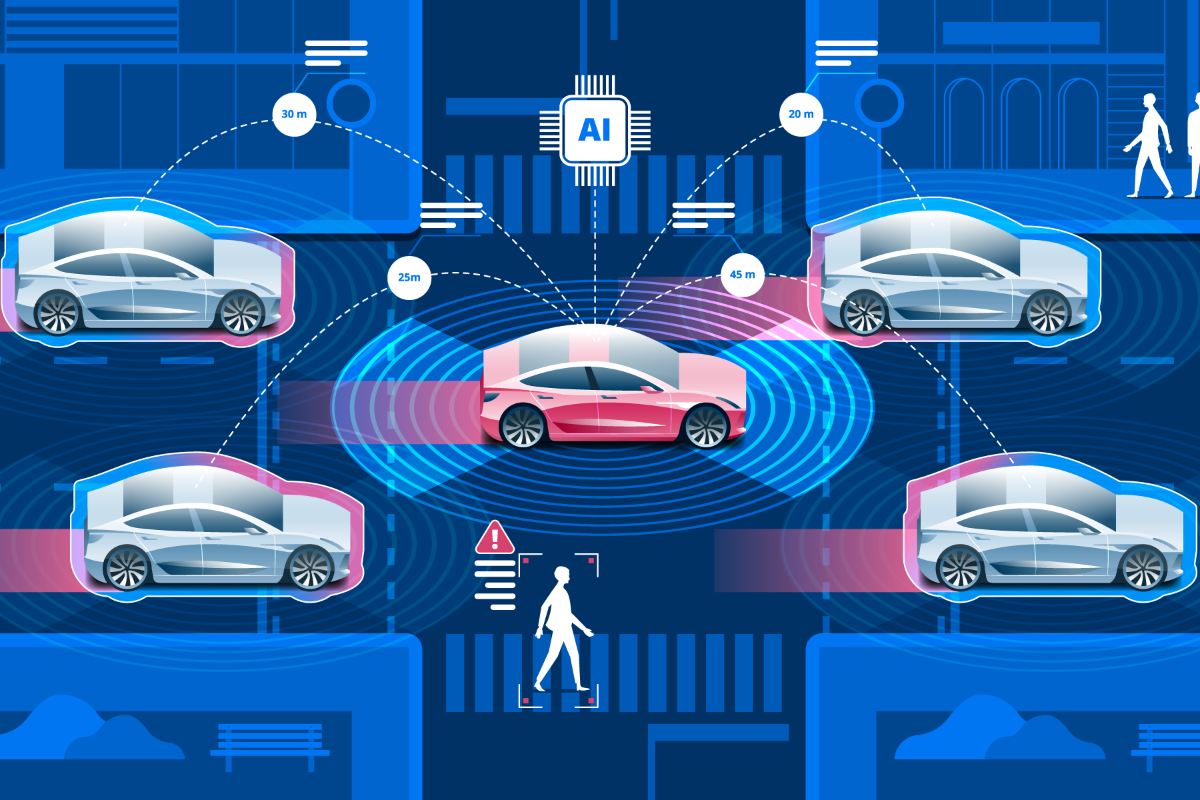

Inflated expectations: about what? The expectations were high indeed, and the promises of self-driving vehicles were many: thanks to a variety of sensors, the Internet of Things (IoT), and artificial intelligence, autonomous cars were designed to sense the road, perceive signs, obstacles and people on the street, keep safe distances, adapt their speed and behaviour to regulations (never break the rules!), receive information on congestion, modify their itinerary, identify vacant parking spots and park themselves. With superhuman sensing and behaviour comes the possibility of reducing signage and road space for traffic and reclaiming it for pedestrians, bikes and leisure activities. At the same time, autonomous car passengers could spend their travel time reading a book, watching films, responding to emails or enjoying the landscape (like the wealthiest people when they hire a chauffeur!).

Enthusiasm led to the first real-world pilot projects, but also to the first problems. In 2016, Joshua Brown, a fan of autonomous cars, died on board his Tesla, which sped up and crashed into a truck. A year later, Tesla was found not responsible, given that the autopilot system had alerted Joshua Brown multiple times before the accident. In 2017, Uber launched a driverless service pilot programme in San Francisco, where autonomous cars ran several red lights. The following year, an Uber driverless car hit and killed a 49-year-old woman who was crossing the road with her bicycle in Arizona. As a result, a shadow of doubt pushed autonomous cars into disillusionment.

Some of these errors could be analysed and fixed. At the end of the day, that is the path of science and progress: trial-error-trial-error-… Supporters of autonomous vehicles have claimed that, although imperfect, autonomous driving is still way safer than human driving. The potential reduction of traffic-related fatalities has been estimated at 585,000 lives from 2035 to 2045.

However, the nature of the remaining problems is not only technical but also ethical.

A great dilemma remains to this day: whose life must an autonomous vehicle save when it has to kill one person or another? This type of situation is not considered rare in road safety. Eventualities like brake failure or sudden jaywalking may force a car to choose between: a) not stopping and killing somebody or b) swerving, saving the pedestrian’s life but perhaps killing the car occupants or other road users.

In 2016, Bonnefon, Shariff and Rahwan found a striking paradox: “even though [survey respondents] approve of autonomous vehicles that might sacrifice passengers to save others, respondents would prefer not to ride in such vehicles. Respondents would also not approve regulations mandating self-sacrifice, and such regulations would make them less willing to buy an autonomous vehicle.” An impossible business, apparently. Autonomous vehicles and their AI systems must incorporate moral principles, and this has become perhaps the biggest obstacle. In an often heartless world, our moral principles have stopped the implementation of autonomous mobility. But why do we ask machines to be more perfect than humans? The essential difference is that, in an accident, human decisions are just sudden reactions, not premeditated choices. It seems we are ready to sympathise with sudden human reactions and to forgive them even when they are not the best. However, will we forgive machines designed to favour some people and discriminate against others according to certain characteristics?

What if the whole of humanity decides these moral principles democratically?

“Welcome to the Moral Machine! A platform for gathering a human perspective on moral decisions made by machine intelligence, such as self-driving cars.

We show you moral dilemmas, where a driverless car must choose the lesser of two evils, such as killing two passengers or five pedestrians. (…) You can then see how your responses compare with those of other people.”

“Start Judging”

That intriguing short text welcomes users from all around the world to the MIT Media Lab’s Moral Machine project, and invites you to develop your god complex, play King Solomon and decide on the life and death of people in the expected near future of autonomous cars. By showing you pairs of pictures, the project asks you to choose some lives over others. One child or one elderly person? Two elderly people or one child?

The number and age of people are not the only factors. Some people are in the car, as drivers or passengers; others are pedestrians. Some are male, others are female. Some are wealthy, others are homeless. Further, traffic lights may be green or red, so a question of responsibility and misbehaviour is involved too.

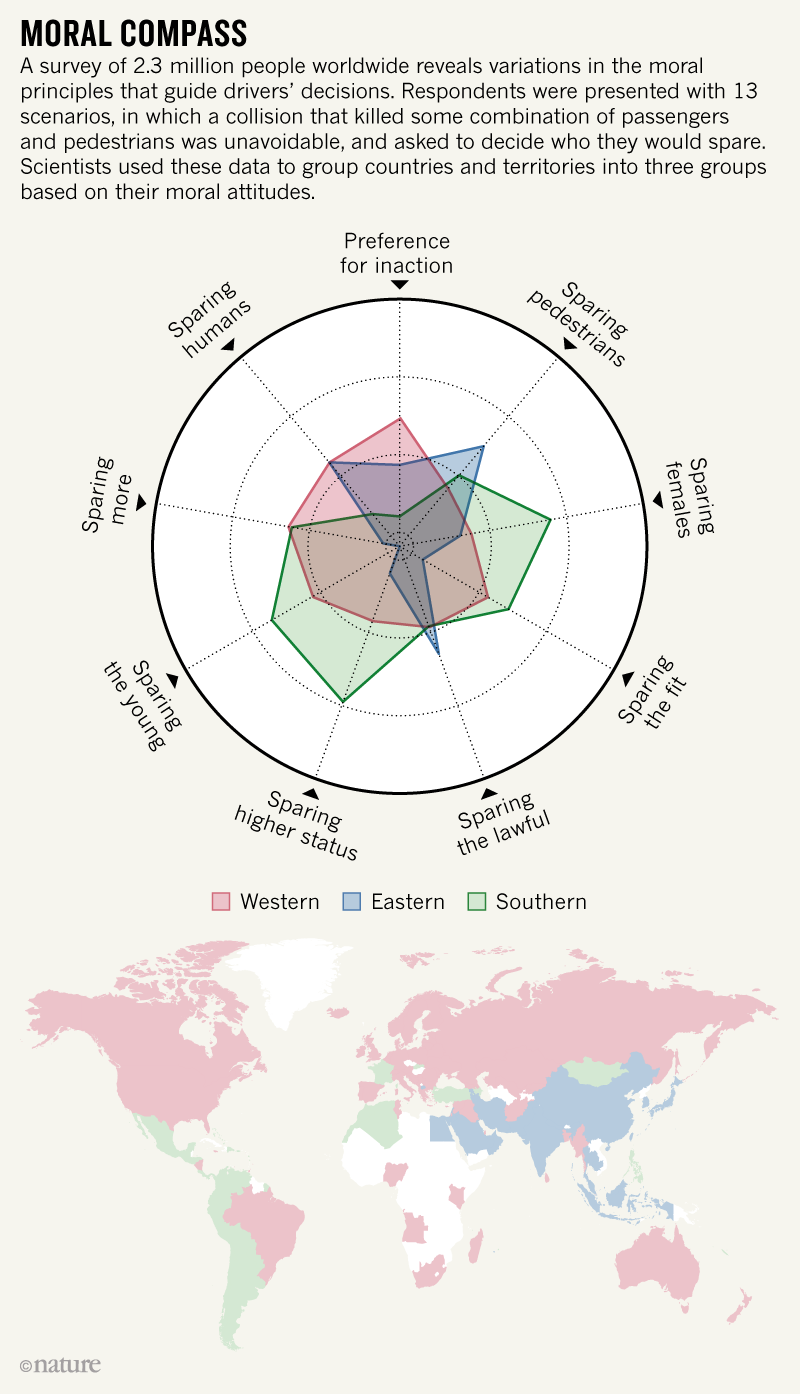

The project “gathered 40 million decisions in ten languages from millions of people in 233 countries and territories” and its results were published in the top academic journal Nature (2018). According to this study, although multiple moral mindsets exist in the world, some “universal moral principles” are generally shared. Almost everyone agrees on three main principles:

- Valuing human lives over animal lives;

- Minimizing the number of deaths;

- Prioritizing younger lives over older ones.
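To make the implications concrete, the three shared preferences can be read as a lexicographic ordering over the possible outcomes of a dilemma. The sketch below is purely illustrative and is not the Moral Machine's method: the project collected survey responses, it did not prescribe rules. The `Victim` type, the `outcome_cost` and `choose` functions, and the strict order in which the criteria are applied are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Victim:
    """Hypothetical description of someone who dies in a given outcome."""
    is_human: bool
    age: int  # in years


def outcome_cost(victims: List[Victim]) -> tuple:
    """Return a cost tuple; Python compares tuples lexicographically.

    Assumed order of criteria (an illustration, not a published rule):
    1. fewer human deaths,
    2. fewer deaths overall,
    3. among otherwise equal outcomes, spare the younger
       (a higher total age of human victims gives a lower cost).
    """
    humans = [v for v in victims if v.is_human]
    return (len(humans), len(victims), -sum(v.age for v in humans))


def choose(option_a: List[Victim], option_b: List[Victim]) -> List[Victim]:
    """Return the outcome with the lower cost; ties keep option_a."""
    return option_a if outcome_cost(option_a) <= outcome_cost(option_b) else option_b


# One child versus one elderly person: this rule sacrifices the elderly person.
child = [Victim(is_human=True, age=8)]
elder = [Victim(is_human=True, age=80)]
sacrificed = choose(child, elder)
```

Written out like this, the rule makes age an explicit input to a life-and-death decision, which is precisely what makes the survey results uncomfortable once they are treated as a specification.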

A hierarchy of who deserves to survive emerged rather directly from the project results. For example, a baby in a pushchair must be saved over a pregnant woman, while the elderly and homeless people would be discriminated against.

However, looking at the finer details, the Moral Machine project also found differences across world regions. Although the respondents’ personal attributes like age or gender seemed rather irrelevant to their final decisions, their religious beliefs did play a role and caused geographical differences. In general, traditionally Christian nations would follow criteria that traditionally Buddhist countries would not share. For example, Asians are more likely to save old ladies, while Europeans and North Americans tend to save athletic people over the obese. Following these differences, the Moral Machine team has identified three types of countries (see image below).

Forms of discrimination like classism and ageism dominate human decision making, but “do people truly want to live in a world with sexist, ageist and classist self-driving cars?” That is the key question for authors like Bigman and Gray, who claimed that the choice between allowing inequality and enforcing equality must be the first structural decision underpinning any autonomous vehicle’s moral system, and that the Moral Machine project neglected it.

The report on automated and connected driving by Germany’s Ethics Commission (2017) also underscores that autonomous vehicles must not replicate flawed human behaviours. Ethical (and political) principles must prevail: “In the event of unavoidable accident situations, any distinction based on personal features (age, gender, physical or mental constitution) is strictly prohibited. It is also prohibited to offset victims against one another”.
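One way to picture the first half of that rule is a filter that removes personal features before any decision logic can see them. This is only a hedged sketch of the idea, not anything taken from the Commission's report: the `PROHIBITED_FEATURES` set, the `anonymise` function and the dictionary format are hypothetical names chosen for illustration.

```python
# Hypothetical feature names mirroring the list quoted above.
PROHIBITED_FEATURES = {
    "age",
    "gender",
    "physical_constitution",
    "mental_constitution",
}


def anonymise(person: dict) -> dict:
    """Return a copy of the record without any prohibited personal feature."""
    return {
        key: value
        for key, value in person.items()
        if key not in PROHIBITED_FEATURES
    }


# The decision logic would only ever receive the filtered record.
detected = {"age": 80, "gender": "female", "position": "crosswalk"}
visible = anonymise(detected)
```

A car built this way could not prefer a child over an elderly person even if its designers wanted it to, because the information never reaches the part of the system that decides.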

A social consensus on all these issues is necessary. If our societies have been able to develop and agree on constitutional principles, and even to proclaim a Universal Declaration of Human Rights, can we not think of moral principles for autonomous cars, and for technological innovations in general?

This article is also available on our Medium: https://medium.com/urban-ai/autonomous-vehicles-moral-against-the-machine-d43e29056d10

By Jose Carpio-Pinedo